Artificial Intelligence (AI) is a way of making computer hardware and software think intelligently, similar to the way humans use natural intelligence.

What is Artificial Intelligence?

The range of tasks computers can perform has grown rapidly since they were first created. Over time, people have made computer systems more powerful by extending them to many working domains, increasing their speed, and reducing their size.

Artificial intelligence is a subfield of computer science that aims to build machines or computers that are as intelligent as people.

According to John McCarthy, known as the father of artificial intelligence, AI is “the science and engineering of making intelligent machines, especially intelligent computer programs.”

Artificial intelligence is a technique for teaching a computer, a computer-controlled robot, or a piece of software to think intelligently, much as intelligent people do.

Intelligent software and systems can be built by first studying how the human brain works and how people learn, make decisions, and collaborate when trying to solve a problem.

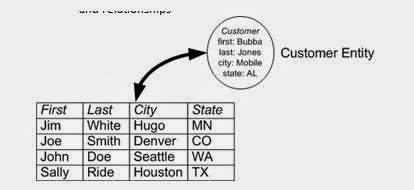

Given enough information, machines can perform human-like actions. Knowledge engineering is therefore crucial to artificial intelligence, and performing it requires establishing the relationships between objects and their properties. The main methods used in artificial intelligence are described further below.

An AI technique, then, is a method that organizes and uses this body of available knowledge as efficiently as possible. The knowledge should be:

- Easily modifiable to correct errors

- Useful in many situations, even if it is incomplete or somewhat inaccurate

- Understandable to the people who provide it

- Clear in its purpose
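A minimal Python sketch of knowledge stored as relationships between objects and their properties follows. The `KnowledgeBase` class, its `tell`/`ask` methods, and the `is_a` inheritance rule are hypothetical illustrations, not a standard API. The example touches two of the qualities listed above: a wrong fact is corrected with a single update, and a question about an object with no direct fact can still be answered through the category it belongs to.

```python
class KnowledgeBase:
    """Hypothetical toy knowledge base: facts map (object, property) -> value."""

    def __init__(self):
        self.facts = {}

    def tell(self, obj, prop, value):
        # Adding a new fact and correcting a wrong one are the same operation.
        self.facts[(obj, prop)] = value

    def ask(self, obj, prop):
        # Look for the property on the object itself, then walk up its
        # "is_a" chain so that general facts fill gaps in specific ones.
        seen = set()
        while obj is not None and obj not in seen:
            seen.add(obj)
            if (obj, prop) in self.facts:
                return self.facts[(obj, prop)]
            obj = self.facts.get((obj, "is_a"))
        return None  # unknown: the knowledge is incomplete


kb = KnowledgeBase()
kb.tell("bird", "can_fly", True)
kb.tell("tweety", "is_a", "bird")
kb.tell("penguin", "is_a", "bird")
kb.tell("penguin", "can_fly", True)   # an error...
kb.tell("penguin", "can_fly", False)  # ...corrected by a single update
kb.tell("pingu", "is_a", "penguin")

print(kb.ask("tweety", "can_fly"))  # True, inherited from "bird"
print(kb.ask("pingu", "can_fly"))   # False, the more specific fact wins
print(kb.ask("tweety", "colour"))   # None, no fact is known
```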

1. Machine Learning (ML)

Machine learning focuses on applications that learn from experience and improve their prediction or decision-making accuracy over time.

Deep learning, a subset of machine learning, uses artificial neural networks for predictive analysis. Machine learning uses a variety of approaches, including supervised, unsupervised, and reinforcement learning. In supervised learning, a function is inferred from training data consisting of input objects paired with their intended outputs. In unsupervised learning, the algorithm does not need labelled (classified) data; it finds structure in the data on its own. In reinforcement learning, a machine learns by trial and error which action is best in a given situation and takes the actions that maximise its reward.
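The three learning styles can be contrasted in a short Python sketch. The function names and toy data are hypothetical, and each function uses one simple representative technique chosen for illustration: a least-squares line fit for supervised learning, two-cluster k-means for unsupervised learning, and an epsilon-greedy multi-armed bandit for reinforcement learning. Real systems typically rely on libraries and much richer models.

```python
import random


def fit_line(xs, ys):
    """Supervised: infer y = w*x + b from labelled (input, output) pairs
    using ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    return w, mean_y - w * mean_x


def two_means(points, iterations=10):
    """Unsupervised: split unlabelled 1-D points into two clusters
    (k-means with k=2). Assumes the points are not all equal."""
    c0, c1 = min(points), max(points)  # start the centroids at the extremes
    for _ in range(iterations):
        group0 = [p for p in points if abs(p - c0) <= abs(p - c1)]
        group1 = [p for p in points if abs(p - c0) > abs(p - c1)]
        c0 = sum(group0) / len(group0)
        c1 = sum(group1) / len(group1)
    return c0, c1


def epsilon_greedy(true_means, steps=2000, epsilon=0.1, seed=0):
    """Reinforcement: learn by trial and error which action earns the most
    reward. true_means are the average rewards, hidden from the learner."""
    rng = random.Random(seed)
    k = len(true_means)
    counts = [0] * k
    estimates = [0.0] * k
    for t in range(steps):
        if t < k:
            action = t  # try every action once
        elif rng.random() < epsilon:
            action = rng.randrange(k)  # explore a random action
        else:
            action = max(range(k), key=lambda a: estimates[a])  # exploit
        reward = rng.gauss(true_means[action], 0.1)  # noisy feedback
        counts[action] += 1
        # Update the running average reward of the chosen action.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


print(fit_line([1, 2, 3, 4], [3, 5, 7, 9]))  # (2.0, 1.0)
print(two_means([1.0, 1.2, 0.8, 5.0, 5.2, 4.8]))  # centroids near 1.0 and 5.0
estimates, counts = epsilon_greedy([0.2, 0.5, 0.8])
print(counts)  # most pulls should go to the last, best-paying action
```

Only the supervised function sees the correct answers; the clustering function sees raw points, and the bandit sees only the rewards of the actions it actually tries.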

2. Natural Language Processing (NLP)

The goal of natural language processing is to build machines that understand text or voice data and respond with text or speech of their own, much as humans do.

In NLP, computers are programmed to process natural language so that they can interact with people. NLP is a well-established technique that uses machine learning to extract meaning from human language. In a spoken-dialogue system, for example, the machine records the audio of a person speaking and converts it to text. The text is then processed to produce a response, which is converted back into audio so that the machine can reply to the person.
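The spoken-dialogue loop can be outlined as a pipeline of stages. In this hedged Python sketch, `speech_to_text` and `text_to_speech` are hypothetical stubs standing in for real speech-recognition and speech-synthesis components (the "audio" is just a dictionary that already carries its transcript), and the understanding step is a toy keyword matcher rather than a machine-learning model.

```python
import re


def speech_to_text(audio):
    # Hypothetical stub: a real system would run a speech-recognition model here.
    return audio["transcript"]


def understand(text):
    # Toy language-understanding step: map the words to an intent.
    words = set(re.findall(r"[a-z]+", text.lower()))
    if "balance" in words:
        return "check_balance"
    if {"agent", "human", "person"} & words:
        return "transfer_to_agent"
    return "unknown"


def respond(intent):
    # Choose a reply for the recognised intent.
    replies = {
        "check_balance": "Your balance will be read out after verification.",
        "transfer_to_agent": "Connecting you to an agent.",
    }
    return replies.get(intent, "Sorry, I did not understand that.")


def text_to_speech(text):
    # Hypothetical stub: a real system would synthesise audio here.
    return {"spoken": text}


def handle_utterance(audio):
    # Audio -> text -> meaning -> reply text -> audio.
    text = speech_to_text(audio)
    intent = understand(text)
    return text_to_speech(respond(intent))


print(handle_utterance({"transcript": "I want to speak to a human, please"}))
# {'spoken': 'Connecting you to an agent.'}
```

Keeping each stage as a separate function means a stub can later be swapped for a real component without changing the rest of the pipeline.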

Applications of NLP can be found in Interactive Voice Response (IVR) systems used in call centres, in language translators such as Google Translate, and in word processors such as Microsoft Word that check the grammar of text.

Natural language processing is difficult, however, because the rules that govern communication in human languages are hard for computers to understand. To translate unstructured data from human languages into a format the computer can work with, NLP uses algorithms that recognise and abstract those rules. NLP is also used in content-improvement tools, such as paraphrasing apps, that make difficult text more readable.
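One common way of turning unstructured text into a format a computer can work with is the bag-of-words representation, which counts how often each vocabulary word occurs in each document. The Python sketch below illustrates that one technique, not how any particular NLP product works; the regular-expression tokenizer is a deliberately simple assumption.

```python
import re
from collections import Counter


def tokenize(text):
    # Lowercase the text and keep runs of letters (and apostrophes) as tokens.
    return re.findall(r"[a-z']+", text.lower())


def bag_of_words(documents):
    # Build a sorted vocabulary, then count each word's occurrences per document.
    vocabulary = sorted({token for doc in documents for token in tokenize(doc)})
    vectors = []
    for doc in documents:
        counts = Counter(tokenize(doc))
        vectors.append([counts[word] for word in vocabulary])
    return vocabulary, vectors


vocab, vectors = bag_of_words(["The cat sat.", "The cat saw the dog."])
print(vocab)    # ['cat', 'dog', 'sat', 'saw', 'the']
print(vectors)  # [[1, 0, 1, 0, 1], [1, 1, 0, 1, 2]]
```

Each document becomes a fixed-length list of numbers that machine-learning algorithms can process. The trade-off is that word order is discarded, so "dog bites man" and "man bites dog" get the same vector.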

3. Automation and Robotics

Robotics focuses on expert systems or applications that are able to perform tasks given to them by a human.

Robots have sensors that pick up information from the outside world, such as temperature, movement, sound, heat, pressure, and light. This information is processed so that they can act intelligently and learn from their mistakes.
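The sense, process, act cycle can be sketched with a simulated room thermostat in Python. Everything here is a hypothetical illustration: the temperature sensor is simulated and assumed to be perfect, the room model (1 °C of heating per step, heat loss proportional to the difference from 15 °C surroundings) is invented for the example, and the decision rule is a simple on/off controller with hysteresis rather than a learning algorithm.

```python
def run_thermostat(start_temp, target, steps=50, band=0.5):
    """Simulate a heater that senses the room temperature and switches itself
    on or off. Returns the temperature after every step."""
    temp = start_temp
    heater_on = False
    history = []
    for _ in range(steps):
        reading = temp  # sense: read the (simulated, perfect) sensor
        # process: switch on below the band, off above it, otherwise keep state
        if reading < target - band:
            heater_on = True
        elif reading > target + band:
            heater_on = False
        # act: the heater adds heat while the room loses heat to 15 °C surroundings
        temp += (1.0 if heater_on else 0.0) - 0.05 * (temp - 15.0)
        history.append(temp)
    return history


history = run_thermostat(start_temp=15.0, target=20.0)
print([round(t, 1) for t in history[-6:]])
```

The band around the target keeps the heater from switching on and off at every step when the reading hovers near the set point.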

The goal of automation is to have machines perform tedious, repetitive jobs, which increases productivity and delivers more effective, efficient, and affordable results. Many businesses use machine learning, neural networks, and graphs to automate processes. Automated systems that use CAPTCHA technology, which distinguishes human users from bots, can also help prevent fraud in online financial transactions. Robotic process automation (RPA) is designed to carry out high-volume, repetitive jobs while remaining flexible enough to adapt to changing conditions.

4. Machine Vision (MV)

Machine vision is the technology and set of methods used to provide imaging-based automatic inspection and analysis for applications such as automatic inspection, process control, and robot guidance, typically in industrial settings.

Machines can collect and analyse visual data. Cameras record the visual data, the image is converted from analogue to digital and processed using digital signal processing, and the resulting data is fed into a computer. Two essential properties of machine vision are sensitivity, the machine's ability to detect weak impulses, and resolution, the extent to which it can distinguish between objects. Machine vision is used in a variety of applications, including pattern recognition, medical image analysis, and signature detection.
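Analogue-to-digital conversion, sensitivity, and resolution can be illustrated with a one-dimensional Python sketch. The scene, its brightness values, and the helper names are hypothetical. A scan line containing two bright strips close together and one faint strip is sampled and quantised to 8-bit pixel values, and objects are counted as runs of pixels at or above a threshold. A lower threshold stands in for higher sensitivity, and more samples per millimetre stand in for higher resolution.

```python
def scene(x_mm):
    """Hypothetical analogue brightness (0.0 to 1.0) along a 10 mm scan line."""
    if 2.0 <= x_mm < 3.0 or 3.5 <= x_mm < 4.5:
        return 0.9  # two bright strips separated by a 0.5 mm gap
    if 7.0 <= x_mm < 8.0:
        return 0.3  # one faint strip
    return 0.1  # background


def digitize(signal, length_mm, samples, bits=8):
    """Analogue-to-digital conversion: sample the signal at evenly spaced
    points and quantise each sample to an integer in 0..2**bits - 1."""
    levels = 2 ** bits - 1
    step = length_mm / samples
    return [round(signal(i * step) * levels) for i in range(samples)]


def count_objects(pixels, threshold):
    """Count runs of consecutive pixels whose value is at or above threshold."""
    count, inside = 0, False
    for value in pixels:
        if value >= threshold and not inside:
            count += 1
            inside = True
        elif value < threshold:
            inside = False
    return count


fine = digitize(scene, 10.0, samples=100)   # one sample every 0.1 mm
coarse = digitize(scene, 10.0, samples=5)   # one sample every 2 mm
print(count_objects(fine, threshold=128))   # 2: the faint strip is missed
print(count_objects(fine, threshold=50))    # 3: higher sensitivity finds it
print(count_objects(coarse, threshold=50))  # 1: too coarse to separate strips
```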